Benchmarking Large Language Models on NVIDIA H100 GPUs with CoreWeave (Part 1)

By a mysterious writer

Last updated February 21, 2025

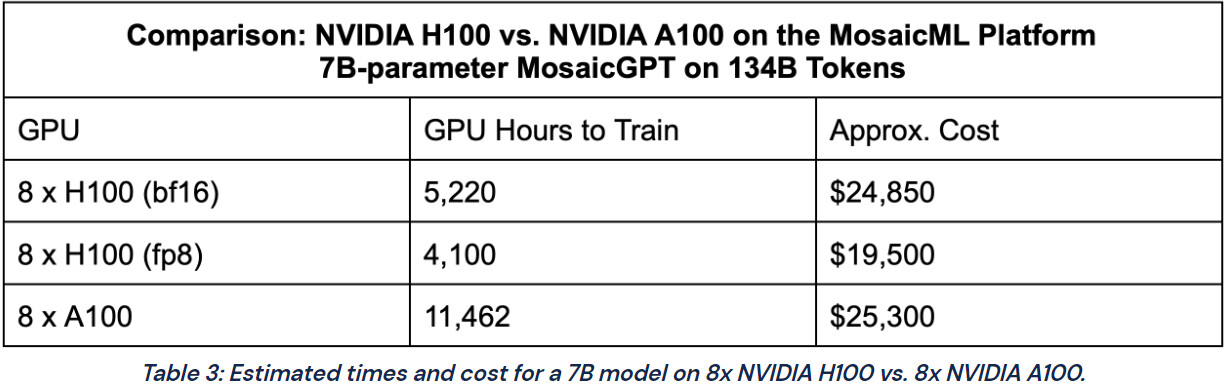

OGAWA, Tadashi on X: Benchmarking Large Language Models on NVIDIA H100 GPUs with CoreWeave, Part 1 (Apr 27, 2023). H100 vs A100: BF16 3.2x, bandwidth 1.6x, GPT training in BF16 2.2x

Beating SOTA Inference Performance on NVIDIA GPUs with GPUNet

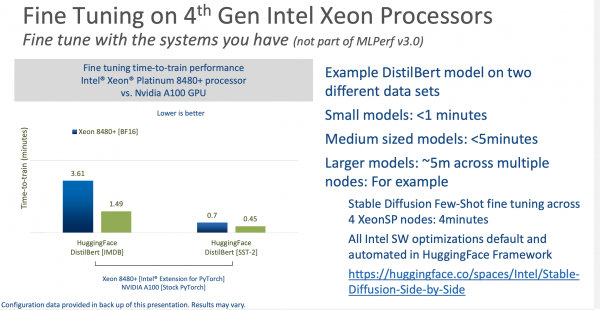

Intel and Nvidia Square Off in GPT-3 Time Trials - IEEE Spectrum

MLPerf Training 3.0 Showcases LLM; Nvidia Dominates, Intel/Habana Also Impress

Hagay Lupesko on LinkedIn: Benchmarking Large Language Models on NVIDIA H100 GPUs with CoreWeave…

Deploying GPT-J and T5 with NVIDIA Triton Inference Server

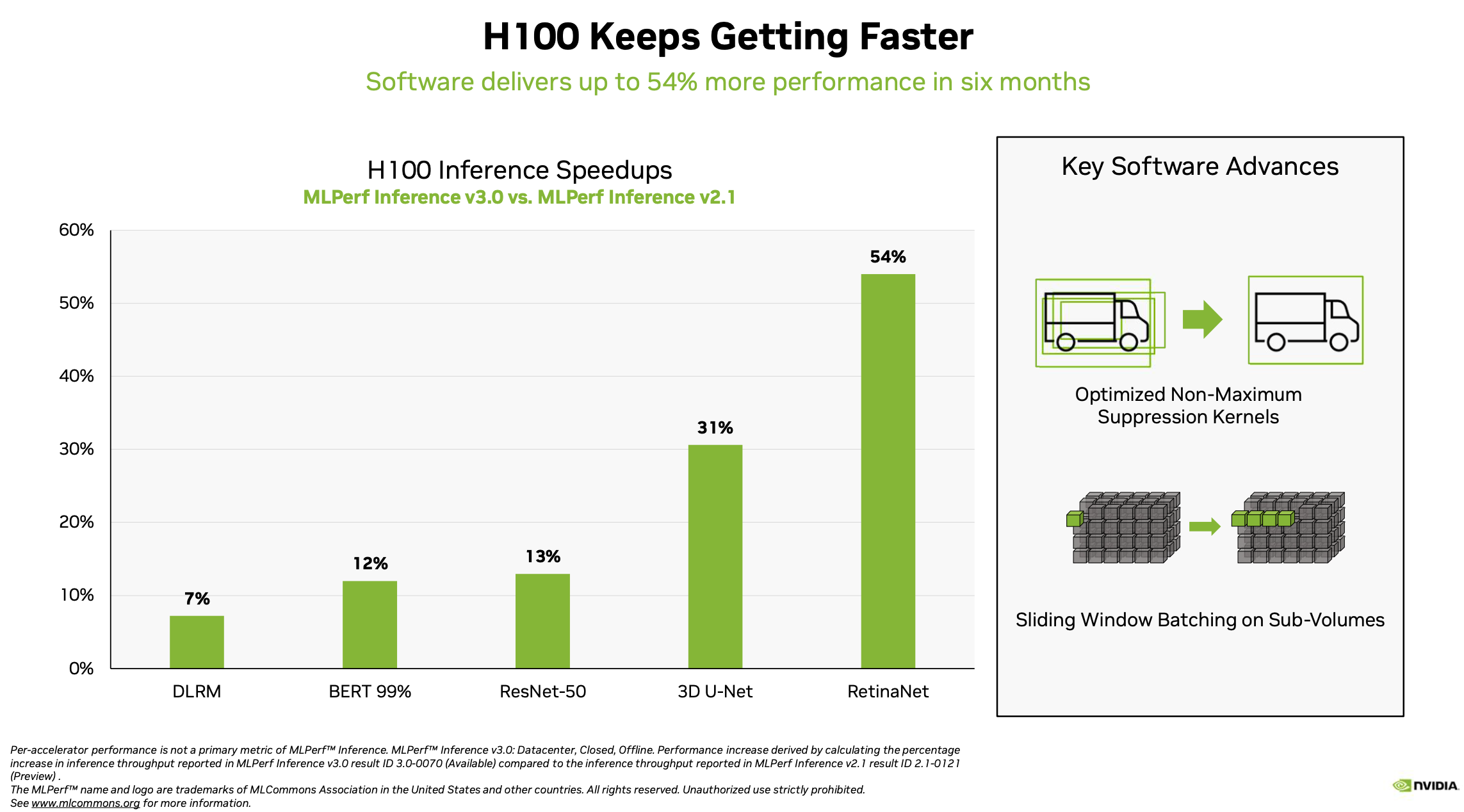

H100 GPUs Set Standard for Gen AI in Debut MLPerf Benchmark

MLPerf Inference 3.0 Highlights - Nvidia, Intel, Qualcomm and…ChatGPT

Max Cohen on LinkedIn: CoreWeave Secures $100M to Expand NVIDIA HGX H100 GPU Offering

Full-Stack Innovation Fuels Highest MLPerf Inference 2.1 Results for NVIDIA

NVIDIA H100 Compared to A100 for Training GPT Large Language Models

Optimizing Inference on Large Language Models with NVIDIA TensorRT-LLM, Now Publicly Available

Efficiently Scale LLM Training Across a Large GPU Cluster with Alpa and Ray

NVIDIA H100 GPUs Set the Standard for Generative AI in the First MLPerf Benchmark

Recomendado para você

-

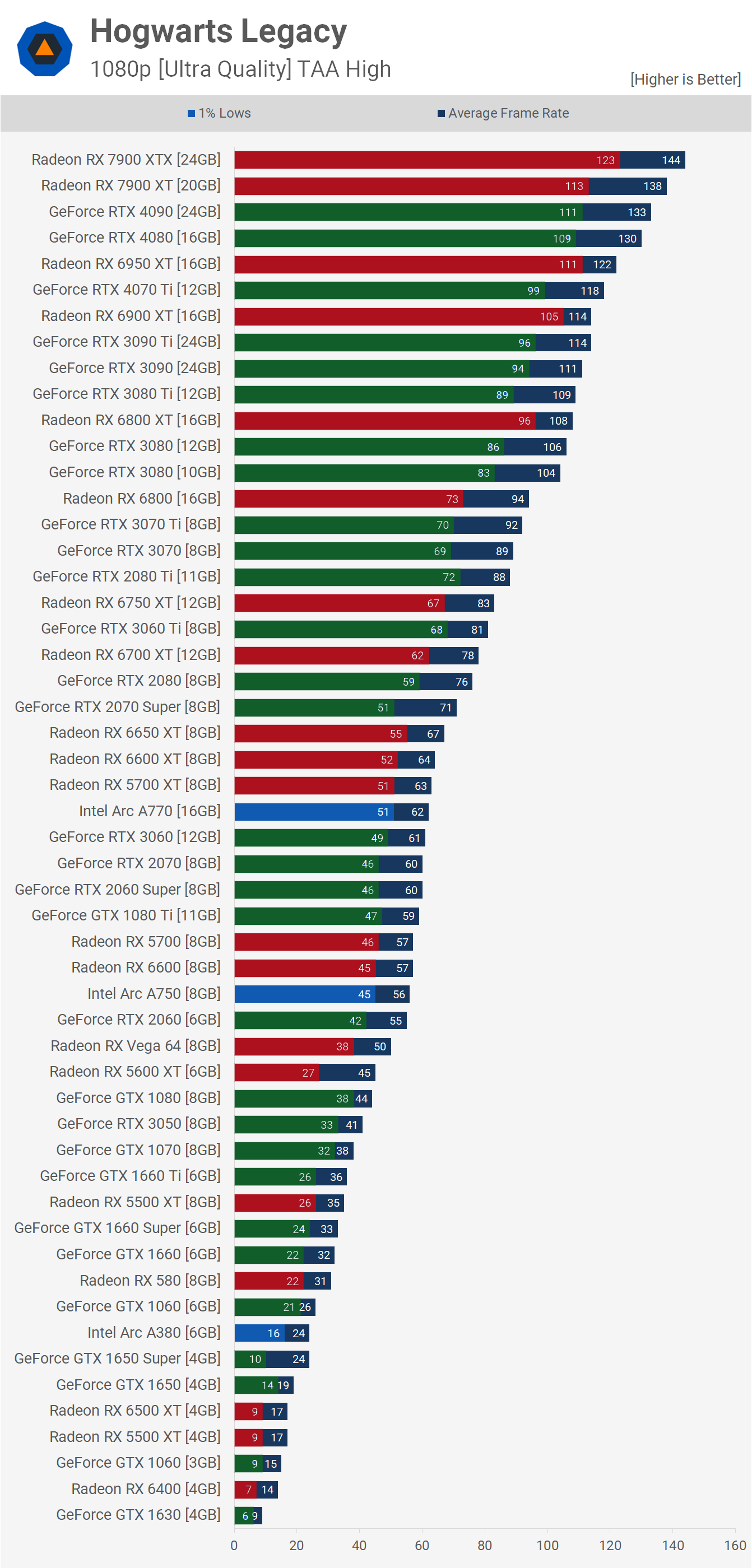

Hogwarts Legacy GPU Benchmark: 53 GPUs Tested21 fevereiro 2025

Hogwarts Legacy GPU Benchmark: 53 GPUs Tested21 fevereiro 2025 -

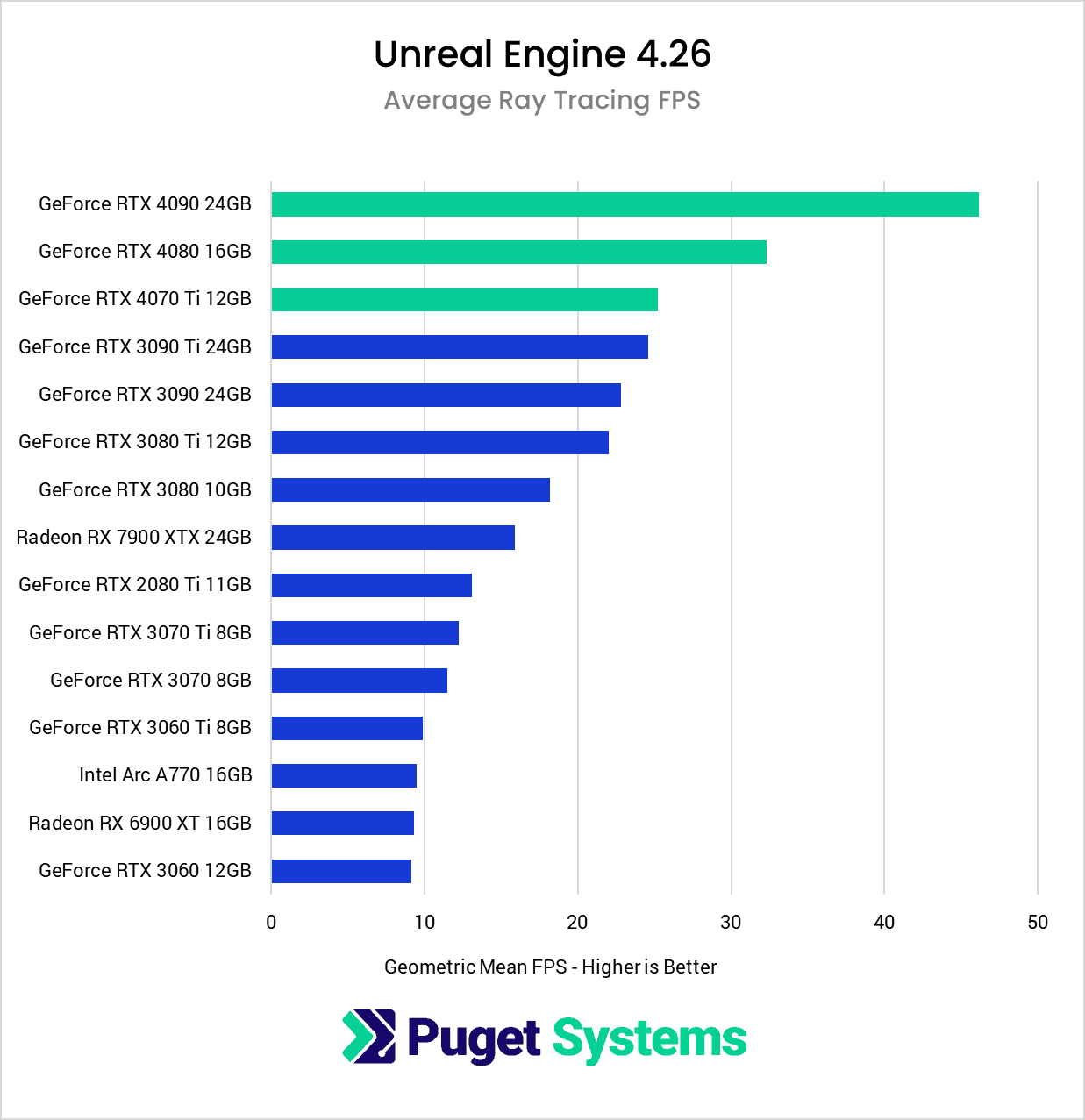

Unreal Engine: NVIDIA GeForce RTX 40 Series Performance21 fevereiro 2025

Unreal Engine: NVIDIA GeForce RTX 40 Series Performance21 fevereiro 2025 -

Nvidia RTX 5000 release date, specs, price and benchmark rumors21 fevereiro 2025

Nvidia RTX 5000 release date, specs, price and benchmark rumors21 fevereiro 2025 -

GPU Performance/Price & Performance/Consumption Indexes January 2023 - intel post - Imgur21 fevereiro 2025

GPU Performance/Price & Performance/Consumption Indexes January 2023 - intel post - Imgur21 fevereiro 2025 -

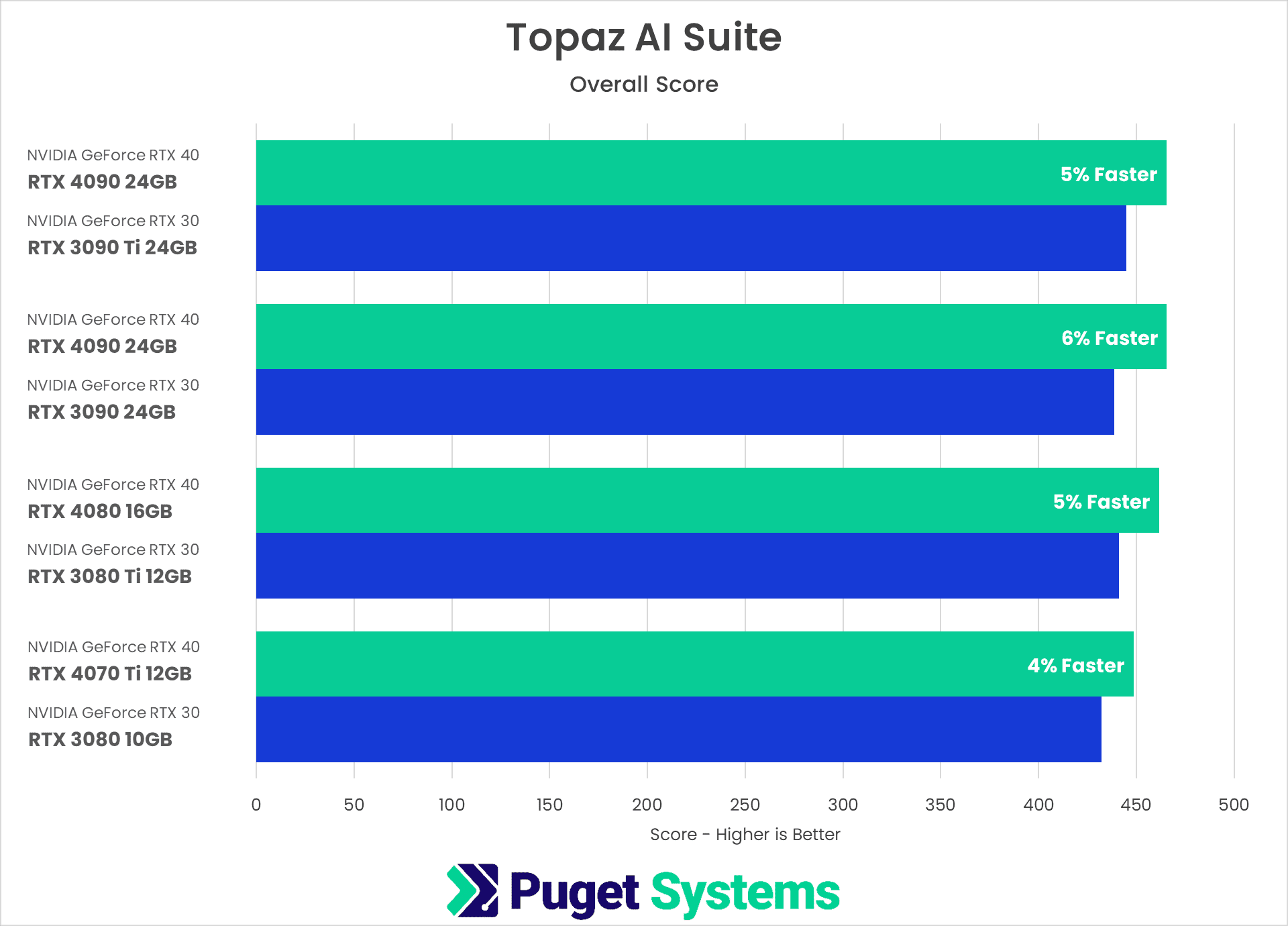

Topaz AI Suite: NVIDIA GeForce RTX 40 Series Performance21 fevereiro 2025

Topaz AI Suite: NVIDIA GeForce RTX 40 Series Performance21 fevereiro 2025 -

Best GPUs to Buy for 1440p Gaming in 2023 – Our Top Picks - GeekaWhat21 fevereiro 2025

Best GPUs to Buy for 1440p Gaming in 2023 – Our Top Picks - GeekaWhat21 fevereiro 2025 -

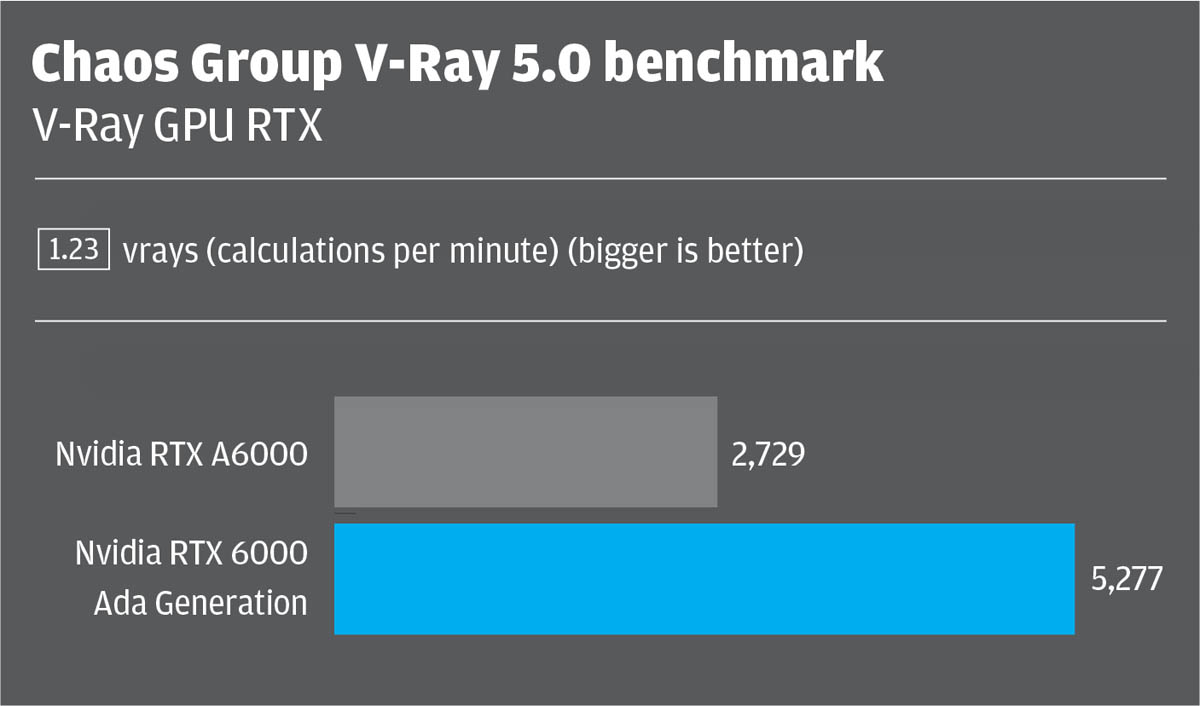

Review: Nvidia RTX 6000 Ada Generation - AEC Magazine21 fevereiro 2025

Review: Nvidia RTX 6000 Ada Generation - AEC Magazine21 fevereiro 2025 -

Best GPUs in 2023: Our top graphics card picks21 fevereiro 2025

Best GPUs in 2023: Our top graphics card picks21 fevereiro 2025 -

Top 2023 GPU Picks for Ultimate Performance21 fevereiro 2025

Top 2023 GPU Picks for Ultimate Performance21 fevereiro 2025 -

Best Graphics Card 2023: Top rated GPUs for every build and budget21 fevereiro 2025

Best Graphics Card 2023: Top rated GPUs for every build and budget21 fevereiro 2025

você pode gostar

-

gavinreed game pixel art indie game gavin reed GIF Pixel art games, Cool pixel art, Pixel art tutorial21 fevereiro 2025

gavinreed game pixel art indie game gavin reed GIF Pixel art games, Cool pixel art, Pixel art tutorial21 fevereiro 2025 -

It's literally over for roblox blox fruit hackers and the roblox21 fevereiro 2025

-

Fantastic first lines from books21 fevereiro 2025

Fantastic first lines from books21 fevereiro 2025 -

Life Of A King Official Trailer #1 (2014) - Cuba Gooding Jr., Dennis Haysbert Movie HD21 fevereiro 2025

Life Of A King Official Trailer #1 (2014) - Cuba Gooding Jr., Dennis Haysbert Movie HD21 fevereiro 2025 -

Como Resolver O Mistério Crime Que Resolve O Jogo - Detetive Games Online Do Jogo Sócios Dos Investigador Dos Detetives Dos Pares Foto de Stock - Imagem de crime, investigue: 11951633421 fevereiro 2025

Como Resolver O Mistério Crime Que Resolve O Jogo - Detetive Games Online Do Jogo Sócios Dos Investigador Dos Detetives Dos Pares Foto de Stock - Imagem de crime, investigue: 11951633421 fevereiro 2025 -

Hitoribocchi no Marumaru Seikatsu Episode 10 Discussion (6021 fevereiro 2025

-

Brinquedo Pokemon Cinto Com Pokebola E Growlithe Sunny 2607 na21 fevereiro 2025

Brinquedo Pokemon Cinto Com Pokebola E Growlithe Sunny 2607 na21 fevereiro 2025 -

GuliKit will make DualSense Edge Hall Effect analogue stick21 fevereiro 2025

GuliKit will make DualSense Edge Hall Effect analogue stick21 fevereiro 2025 -

Demon Slayer: Você conseguiria sobreviver se fosse um Matador de Demônios?21 fevereiro 2025

Demon Slayer: Você conseguiria sobreviver se fosse um Matador de Demônios?21 fevereiro 2025 -

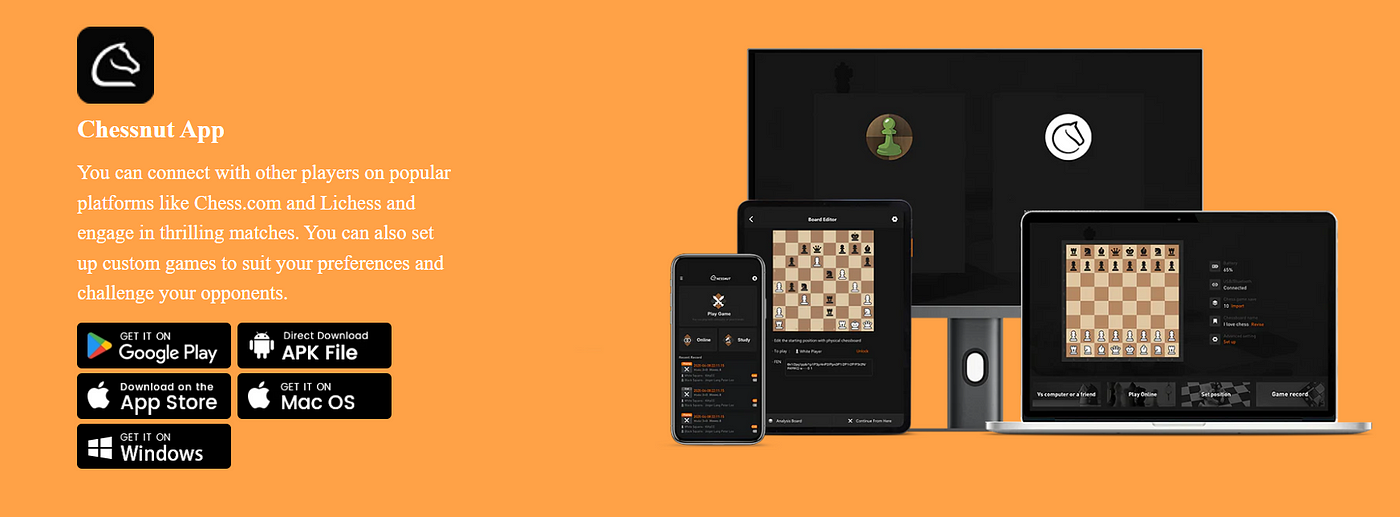

Mastering the Art of Strategy: Play Chess Online, by Chessnut, Oct, 202321 fevereiro 2025

Mastering the Art of Strategy: Play Chess Online, by Chessnut, Oct, 202321 fevereiro 2025